Stable Diffusion (SDXL 0.9 & 1.0)

Run a Stable Diffusion Text to Image Job

Overview

In general terms, diffusion is what happens when you put a couple of drops of dye into a bucket of water. Given time, the dye disperses randomly and eventually settles into a uniform distribution that colours all the water evenly.

In computer science, you define rules for your (dye) particles to follow and the medium this takes place in.
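As a rough illustration of the dye analogy (not of how the SDXL model itself works), the sketch below treats the bucket as a row of cells and moves each particle one cell left or right at random. The cell count, particle count, and function names are arbitrary choices made for this example.

```python
import random


def diffuse(positions, steps, width, rng):
    """Move every particle one cell left or right per step, staying inside the bucket."""
    positions = list(positions)
    for _ in range(steps):
        for i, x in enumerate(positions):
            x += rng.choice((-1, 1))
            # Clamp at the bucket walls so no dye is lost.
            positions[i] = min(max(x, 0), width - 1)
    return positions


def spread(positions):
    """Population variance of the positions: a rough measure of dispersion."""
    mean = sum(positions) / len(positions)
    return sum((x - mean) ** 2 for x in positions) / len(positions)


rng = random.Random(0)       # fixed seed so the run is repeatable
start = [10] * 500           # all dye dropped into the middle of a 21-cell bucket
end = diffuse(start, 200, 21, rng)
print("spread before:", spread(start))
print("spread after:", spread(end))
```

The rule (one random step per tick) and the medium (a walled row of cells) are the two things defined here; the growing spread is the dye dispersing.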

Stable Diffusion is a machine learning model for text-to-image generation (like DALL-E). It is based on a diffusion probabilistic model and uses a transformer-based text encoder to condition the generated image on the text prompt.

Getting Started

Prerequisites

Before running SDXL, make sure the Lilypad CLI is installed on your machine and your private key environment variable is set. Both are required for operations within the Lilypad network.
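A quick way to confirm both prerequisites is a small check script. The sketch below is only an illustration: it assumes the CLI binary is named `lilypad` and that the private key lives in an environment variable called `WEB3_PRIVATE_KEY`. Neither name is stated on this page, so adjust both to match your installation.

```python
import os
import shutil


def check_prerequisites(key_var="WEB3_PRIVATE_KEY", binary="lilypad", env=None):
    """Return a list of problems; an empty list means both prerequisites are met.

    key_var and binary are assumed names, not confirmed by this page.
    """
    env = os.environ if env is None else env
    problems = []
    if shutil.which(binary) is None:
        problems.append(f"'{binary}' was not found on PATH")
    if not env.get(key_var):
        problems.append(f"environment variable {key_var} is not set")
    return problems


if __name__ == "__main__":
    for problem in check_prerequisites():
        print("missing:", problem)
```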

Learn more about installing the Lilypad CLI and running SDXL with this video guide.

Run SDXL v0.9 or 1.0

When running SDXL pipelines in Lilypad, you have the choice between using the Base model or the Refiner model. Each serves a unique purpose in the image generation process:

Base Model: This is the primary model that generates the initial image based on your input prompt. It focuses on the broad aspects of the image, capturing the main theme and essential elements. The Base model is faster and uses less computational power.

Refiner Model: This model takes the image from the Base model and enhances it. It refines details, improves textures, and adjusts colors to increase the visual appeal and realism of the image. The Refiner model is used when you need higher quality and more detailed images.

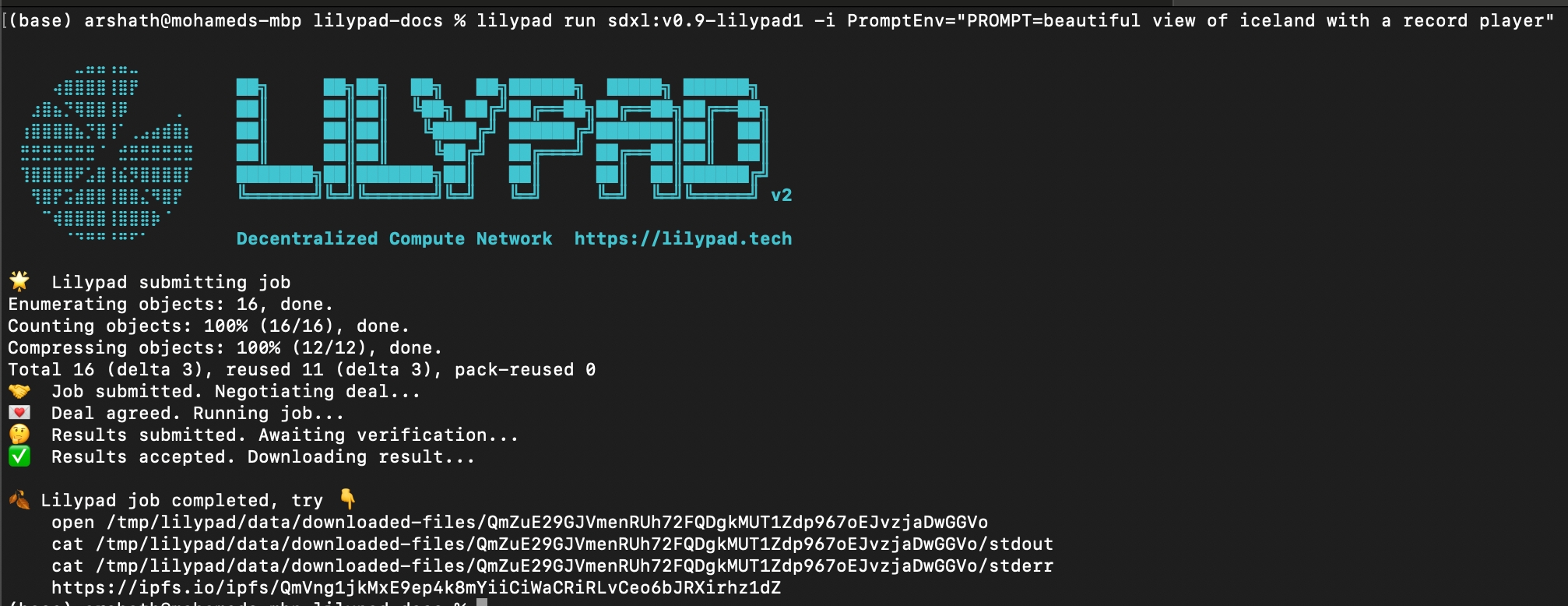

To run the SDXL pipeline in Lilypad, use the following commands:

SDXL v0.9

Base:

Refiner:

SDXL 1.0

Base:

Refiner:

SDXL Output

To view the results in a local directory, navigate to the local folder.

In the /outputs folder, you'll find the image:

To view the results on IPFS, navigate to the IPFS CID result output.

Please be patient! IPFS can take some time to propagate and doesn't always work immediately.

As Lilypad modules are currently deterministic, running this command with the same text prompt will produce the same image, since the same seed is also used (the default seed is 0).
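The role of the seed can be illustrated with any seeded random number generator. The sketch below uses Python's standard `random` module as a stand-in; it is an analogy only and does not reproduce the noise generation SDXL actually uses. The function name `initial_noise` is made up for this example.

```python
import random


def initial_noise(seed, n=4):
    """Draw n Gaussian samples from a generator seeded with `seed`."""
    rng = random.Random(seed)
    return [rng.gauss(0.0, 1.0) for _ in range(n)]


same_a = initial_noise(0)
same_b = initial_noise(0)
other = initial_noise(1)

print("seed 0 twice gives identical noise:", same_a == same_b)
print("seed 0 and seed 1 give identical noise:", same_a == other)
```

With the starting noise fixed by the seed and every later step deterministic, the same prompt leads to the same image.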

Tuning an output

To change an image output, pass in a different seed number:

Lilypad can run SDXL v0.9 or SDXL v1.0 with the option to add tunables to improve or change the model output.

To specify more than one tunable, such as the number of steps, add another -i flag for each one. For example, to improve the quality of the generated image, add Steps=x, where x is an integer from 5 to 200.
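Repeating the `-i` flag for each tunable can be sketched as a small helper that turns a dictionary into an argument list. This is an illustrative sketch: `tunable_flags` is a hypothetical helper, and the resulting flags still need to be appended to the run command for the module you are using, which is not reproduced here.

```python
import shlex


def tunable_flags(tunables):
    """Turn {"Seed": 7, "Steps": 80} into ["-i", "Seed=7", "-i", "Steps=80"]."""
    flags = []
    for name, value in tunables.items():
        flags += ["-i", f"{name}={value}"]
    return flags


flags = tunable_flags({
    "Prompt": "question mark floating in space",
    "Seed": 7,
    "Steps": 80,
})
# shlex.join quotes values that contain spaces so the line is safe to paste into a shell.
print(shlex.join(flags))
```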

Options and tunables continued

The following tunables are available. All of them are optional, and have default settings that will be used if you do not provide them.

| Name | Description | Default | Available options |
| --- | --- | --- | --- |
| Prompt | A text prompt for the model | "question mark floating in space" | Any string |
| Seed | A seed for the model | 42 | Any valid non-negative integer |
| Steps | The number of steps to run the model for | 50 | Any valid non-negative integer from 5 to 200 inclusive |
| Scheduler | The scheduler to use for the model | normal | normal, karras, exponential, sgm_uniform, simple, ddim_uniform |
| Sampler | The sampler to use for the model | euler_ancestral | euler, euler_ancestral, heun, heunpp2, dpm_2, dpm_2_ancestral, lms, dpm_fast, dpm_adaptive, dpmpp_2s_ancestral, dpmpp_sde, dpmpp_sde_gpu, dpmpp_2m, dpmpp_2m_sde, dpmpp_2m_sde_gpu, dpmpp_3m_sde, dpmpp_3m_sde_gpu, ddpm, lcm |
| Size | The output size requested in px | 1024 | 512, 768, 1024, 2048 |
| Batching | How many images to produce | 1 | 1, 2, 4, 8 |

See the usage sections for the runner of your choice for more information on how to set and use these variables.
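Because a job submitted with an out-of-range value may fail, it can help to check tunables locally first. The sketch below encodes the allowed values listed above; the `validate` function and its error messages are illustrative and are not part of the Lilypad module.

```python
SCHEDULERS = {"normal", "karras", "exponential", "sgm_uniform", "simple", "ddim_uniform"}
SAMPLERS = {
    "euler", "euler_ancestral", "heun", "heunpp2", "dpm_2", "dpm_2_ancestral",
    "lms", "dpm_fast", "dpm_adaptive", "dpmpp_2s_ancestral", "dpmpp_sde",
    "dpmpp_sde_gpu", "dpmpp_2m", "dpmpp_2m_sde", "dpmpp_2m_sde_gpu",
    "dpmpp_3m_sde", "dpmpp_3m_sde_gpu", "ddpm", "lcm",
}
SIZES = {512, 768, 1024, 2048}
BATCHING = {1, 2, 4, 8}

# One check per tunable, mirroring the documented options.
CHECKS = {
    "Prompt": lambda v: isinstance(v, str),
    "Seed": lambda v: isinstance(v, int) and v >= 0,
    "Steps": lambda v: isinstance(v, int) and 5 <= v <= 200,
    "Scheduler": lambda v: v in SCHEDULERS,
    "Sampler": lambda v: v in SAMPLERS,
    "Size": lambda v: v in SIZES,
    "Batching": lambda v: v in BATCHING,
}


def validate(tunables):
    """Return a list of error strings; an empty list means every value is allowed."""
    errors = []
    for name, value in tunables.items():
        check = CHECKS.get(name)
        if check is None:
            errors.append(f"{name}: unknown tunable")
        elif not check(value):
            errors.append(f"{name}: invalid value {value!r}")
    return errors


print(validate({"Steps": 50, "Sampler": "euler", "Size": 1024}))
print(validate({"Steps": 4, "Batching": 3}))
```

Tunables left out of the dictionary are simply not checked, which matches their being optional.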

Learn more about this Lilypad module on GitHub.

